A team of professors from the Naval Postgraduate School’s (NPS) Department of Applied Mathematics has joined a consortium of leading researchers seeking to revolutionize the world of climate modeling with the construction of a novel and considerably more accurate Earth System Model (ESM).

Launched officially in late 2018, the Climate Modeling Alliance (CliMA), a coalition of scientists, engineers and applied mathematicians from Caltech, Massachusetts Institute of Technology (MIT) and NPS, announced that it had secured funding to build a new and improved climate model (dubbed “CLIMA”) from the ground up. Working with NASA’s Jet Propulsion Laboratory (JPL), the group has set itself the bold goal of creating, in five years’ time, a model that “projects future changes in critical variables such as cloud cover, rainfall, and sea ice extent with uncertainties at least two times smaller than existing models.” The group now stands at the beginning of a daunting and potentially game-changing project.

Principal investigator (PI) Tapio Schneider (Caltech) and colleagues propose to reach their goal with several pioneering features, including a combination of increased resolution in select parts of the model (thus decreasing errors in computable parameters) and the addition of real-world data in the form of satellite observations.

The latter has the potential to increase the accuracy of non-computable parameters, such as low-lying clouds, which have long been an aspect of climate modeling prone to error. CLIMA, then, will be a model that combines several unique features, including the use of over a decade of data culled via satellite observation to help get a handle on these non-computable parameters. This data will come courtesy of JPL and the A-train, a group of several satellites that follow each other closely along the same orbital track and cross the equator at about 13:30 local solar time every day, taking various instrument readings.

Schneider and colleagues plan to develop CLIMA in such a way that, over time, it will be able to use “data-assimilation and machine learning tools to ‘teach’ the model to improve itself in real time, harnessing both Earth observations as well as the nested high-resolution simulations.” The machine learning component will be created by a team at Caltech. Access to data from the A-train satellite formation will give CLIMA over a decade of “nearly simultaneous measurements” of variables including cloud and sea ice cover, humidity, and temperature. The CliMA team feels that this data has much to offer when it comes to improving current parameterization schemes; additionally, it can be supplemented and validated with detailed local observations from the ground and from field studies used to test the accuracy of parameterizations within the ESM.

To complete such a bold undertaking in five years, Schneider and his colleagues knew they would need experts capable of working within a large consortium of international researchers on a project spanning several major research institutions. Schneider therefore assembled a hand-picked, multi-campus team that included NPS professor of Applied Mathematics Frank Giraldo as the PI for the group tasked with developing the CLIMA atmospheric component.

Aware that he would need elite numerical analysts to accomplish this, Giraldo approached fellow NPS professors of Applied Mathematics Jeremy Kozdon and Lucas Wilcox. The timing was fortuitous, as the three had been looking to collaborate on “something special,” according to Giraldo. With CLIMA, the three experts in scientific computing are poised to break new ground in the world of ESMs as the architects of the code at the heart of a model that promises a number of firsts.

ESMs: CURRENT MODELS AND OPPORTUNITIES FOR IMPROVEMENT

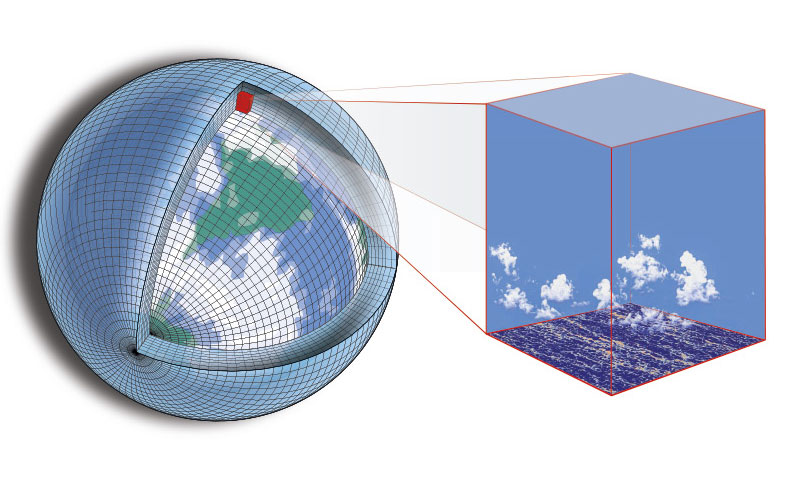

In order to understand the groundbreaking nature of both CLIMA and the NPS team’s contribution to the model, it is useful to know how most ESMs work. Current climate models work by dividing the globe into a grid and computing processes that occur in each of these sectors and how these sectors interact with one another. The accuracy of these models is highly dependent on the size of the grid’s sectors, as these determine the resolution at which the model is able to view the Earth. Generally, these sectors cannot be any smaller than tens of kilometers per side, due to the limitations of currently available computer processing power. An unfortunate result of these limitations is that existing models have grid sectors too large – and thus resolutions too low – to accurately capture some small-scale features that ultimately have large-scale impacts on the various systems that make up the model.
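A rough count of grid sectors helps show why resolution is so tightly bound to computing power. The sketch below is illustrative only: it assumes a uniform grid of square sectors and a round figure of about 510 million square kilometers for Earth's surface area. Neither assumption comes from the CliMA project, and the function name is invented for this example.

```python
# Approximate surface area of Earth in square kilometers (assumed round figure).
EARTH_SURFACE_KM2 = 5.1e8


def horizontal_cells(spacing_km: float) -> int:
    """Approximate number of square grid sectors needed to tile the globe.

    This ignores vertical levels and the details of real model grids.
    """
    return round(EARTH_SURFACE_KM2 / spacing_km**2)


if __name__ == "__main__":
    for spacing in (100.0, 50.0, 25.0, 10.0, 1.0):
        count = horizontal_cells(spacing)
        print(f"{spacing:6.1f} km sectors -> about {count:,} sectors")
```

Under these assumptions, halving the side of each sector quadruples the number of sectors, before accounting for vertical levels or the shorter time steps that finer grids typically require.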

In order to represent these subgrid-scale (SGS) processes (such as clouds and turbulence), ESMs rely on parameterization schemes. A key component of ESMs, the parameterization of physical processes within the model helps account for atmospheric processes that cannot be directly represented within the ESM. Reasons for parameterization include instances where computational expense is too high (due to the complexity or small scale of various processes) and cases wherein scientists lack sufficient knowledge of how a particular process works to represent it mathematically in the explicit manner the model requires. Common parameterizations in numerical weather and climate prediction include cloud microphysics, convection, turbulence/boundary-layer, radiation, stochastic, and cloud-cover/cloudiness parameterizations.

These schemes are generally developed independently of the ESM into which they will be integrated, tested utilizing observations (conducted at limited locations), and then manually adjusted in a process commonly referred to as “tuning.” Since this tuning is conducted utilizing only a portion of available data, it can lead to inefficiency and compensating errors in the components of the model in which it is used. Consequently, current parameterization schemes often contain large uncertainties when it comes to SGS processes, as do any parts of the ESM that rely upon the data provided or affected by these schemes.
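The effect of tuning on only a portion of the available data can be seen in a toy setting. The sketch below is not a real parameterization scheme: it assumes a hypothetical one-parameter closure, flux = k * gradient, and uses made-up numbers in place of observations, purely to show that a coefficient tuned on a few sites can differ from one tuned on all of them.

```python
def tune_coefficient(gradients, fluxes):
    """Least-squares fit of k in the toy closure flux = k * gradient."""
    numerator = sum(g * f for g, f in zip(gradients, fluxes))
    denominator = sum(g * g for g in gradients)
    return numerator / denominator


# Synthetic, made-up "observations" for illustration (not real measurements).
gradients = [1.0, 2.0, 3.0, 4.0]
fluxes = [2.1, 3.9, 6.3, 7.7]

# Tuning against only the first two sites...
k_subset = tune_coefficient(gradients[:2], fluxes[:2])
# ...versus tuning against every available sample.
k_all = tune_coefficient(gradients, fluxes)

if __name__ == "__main__":
    print(f"k tuned on a subset: {k_subset:.4f}")
    print(f"k tuned on all data: {k_all:.4f}")
```

In this toy case the two coefficients differ only slightly, but in a model run over centuries even small differences in tuned values can compound.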

Since various parameterizations are typically developed independently from each other (and from the ESM they will be integrated into), their interaction within the overall ESM opens up further potential problems when it comes to the accuracy of these models. For instance, the longer time periods that characterize ESMs (these can range from hundreds to thousands of years) are more drastically affected by small errors that occur in the representation of these processes than are numerical weather prediction models, which need only be run for shorter periods of time (typically seven to ten days). While tuning may address this issue to some degree, this process is not consistent or uniformly practiced, and often varies between practitioners and models.

An artist's representation of the way climate models parse the globe into a grid. (Illustration courtesy Caltech)

Among these parameterizations is one of the aspects of ESMs that introduces some of the greatest uncertainty into current models: the parameterization of clouds. Key to this parameterization (and to the equations of ESMs in general) are the Navier-Stokes equations of fluid motion, a set of equations that capture the temperature, pressure, speed, and density of the water in the ocean and the gases in the atmosphere. Clouds act as an additional set of forcings that must be added to the ESM yet are not ruled by the Navier-Stokes equations that govern so many of the models’ other dynamics, so they introduce several layers of difficulty when it comes time to program computer climate models. NPS team leader Frank Giraldo estimates that current models underproduce clouds by a factor of two relative to what exists in reality. This discrepancy has obvious implications for a model’s accuracy, and the NPS team, as those charged with CLIMA’s atmospheric component, aims to reduce it and help create a model with an accuracy unmatched by existing ESMs.

A MOVE TOWARD MORE ACCURATE MODELING

As experts in scientific computing, Giraldo, Kozdon and Wilcox bring the benefits of their unique training and skill sets to the table as they work to design CLIMA’s atmospheric component. Their backgrounds include training and work in finite difference methods and discontinuous Galerkin methods, and the three applied mathematicians cite this diversity as a key to their success, both in their work at NPS and as they design the code that will drive CLIMA’s atmospheric component. The way they will approach the equations at the heart of these codes is one of the aspects of CLIMA that will be wholly unique: the NPS team plans to use a numerical scheme that, while it has been used on other flow problems, has not been applied to weather and atmospheric problems (with the exception of the Nonhydrostatic Unified Model of the Atmosphere [NUMA], a weather prediction model designed for the U.S. Navy by Giraldo).

While most current ESMs use low-order finite volume or high-order spectral methods to help solve the partial differential equations at the heart of the models’ parameterizations, the NPS team will use cutting-edge numerics in the form of discontinuous Galerkin (DG) methods. This use of DG methods will allow for the introduction of higher-order polynomials into CLIMA’s atmospheric component, which in turn will offer better accuracy. In addition to DG methods, the team’s use of entropy stability will spare them from adding artificial viscosity to the equations, a practice known as “regularization” that is done solely to stabilize the model. According to Giraldo, regularization is currently used by all weather and climate centers, regardless of what mathematical methods their models employ, which would make the NPS team the first to stabilize these equations without it within their component of the ESM.

Taken together with their use of state-of-the-art numerics, this puts Giraldo, Kozdon and Wilcox at the cutting edge of work being done across all areas of numerical methods, not just weather and climate. It also positions the parameterizations that drive their atmospheric component to be dramatically more accurate without sacrificing stability in the model, a frequent concern cited regarding the introduction of higher-order polynomials into ESMs. Giraldo estimates that, with entropy-stable high-order discontinuous Galerkin methods, even a “modest order in the increase in polynomial order (say 4th order)” will give CLIMA a boost in accuracy in comparison with existing ESMs.
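The link between polynomial order and accuracy can be illustrated without a full solver. The sketch below is not a DG method and is not taken from the CLIMA code: it simply interpolates a smooth function, sin(pi * x), on a single element [-1, 1] with polynomials of increasing order and reports the largest error. The choice of Chebyshev-Gauss-Lobatto points, the test function, and all function names are assumptions made for this example.

```python
import math


def lobatto_nodes(order):
    """Chebyshev-Gauss-Lobatto points on [-1, 1] for a polynomial of the given order."""
    return [-math.cos(math.pi * j / order) for j in range(order + 1)]


def lagrange_interpolate(nodes, values, x):
    """Evaluate the Lagrange interpolating polynomial through (nodes, values) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(nodes, values)):
        basis = 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def max_interpolation_error(order, samples=201):
    """Largest sampled error when approximating sin(pi * x) on one element [-1, 1]."""
    nodes = lobatto_nodes(order)
    values = [math.sin(math.pi * xn) for xn in nodes]
    worst = 0.0
    for k in range(samples):
        x = -1.0 + 2.0 * k / (samples - 1)
        exact = math.sin(math.pi * x)
        worst = max(worst, abs(lagrange_interpolate(nodes, values, x) - exact))
    return worst


if __name__ == "__main__":
    for order in (2, 4, 8):
        print(f"order {order}: max error {max_interpolation_error(order):.2e}")
```

For a smooth function like this one, the error drops quickly as the order rises, which is the basic appeal of high-order methods. Real atmospheric flows are less well behaved, which is why stability becomes a concern at higher orders.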

The math behind the code, however, is only one portion of NPS’ contribution to CLIMA. The code that Giraldo, Kozdon and Wilcox are working on will be a linchpin of CLIMA, as the teams at MIT and Caltech have expressed interest in using the code developed at NPS as a basis for their own portions of the model. In the case of MIT, this will be CLIMA’s ocean component, while Caltech is working on a component for atmospheric processes. According to Giraldo, the idea is that the NPS team will build their portion of CLIMA, the atmospheric component, in such a way that it can run on any hardware. The MIT team could then leverage this software to build a new ocean component on top of the NPS atmospheric component, and the Caltech team could build its physical parameterizations on the NPS component as well. The various components of the overall model would then share the same numerics, repositories and code throughout. This would make the model both extremely consistent and extremely rare, according to Giraldo, as most ESMs are written by many different designers using various computer languages and assembled after the fact.

JULIA: A NEW LANGUAGE FOR A NEW MODEL

Since most current ESMs have been around for decades, many are written in Fortran, a programming language developed by IBM in the 1950s. Fortran code is written in a form closer to human language and must be translated (compiled) into machine language before it runs, which allows climate models programmed in it to run efficiently. Current ESMs typically use Fortran and C for this reason, so that they run quickly on the supercomputers required for the task.

A high-level programming language newer than Fortran and more widely used by today’s programmers, Python might appear the obvious choice for a new climate model. Coding in “pure Python,” however, yields results too slow to be considered efficient, and Wilcox understood that this would mean writing CLIMA’s code in Python plus something else (C, CUDA or OpenCL), with the performance-critical parts crafted in the additional (non-Python) code in order to achieve faster run times. With CLIMA’s accelerated timeline for completion and new insights from Kozdon’s recent teaching experiences on their minds, Wilcox and Kozdon contemplated alternatives for the task at hand.

Seeing an opportunity to streamline the project, Wilcox and Kozdon suggested using Julia, an up-and-coming programming language developed by a team at MIT. Julia eliminates the two-language problem in computing, meaning that the NPS team is able to prototype and run their code utilizing one programming language. This saves both time and expense when compared to previous ESMs, which were prototyped in one language and then run in a faster one.

Giraldo agreed with Wilcox and Kozdon, noting that despite its ease of use and ability to handle a variety of applications, Python is not the best choice for industrial applications like the ones that he and the NPS team are designing for CLIMA. In his estimation, Julia promises an elegance and speed for these large systems that Python simply cannot match. Giraldo finds added benefit in how Julia’s design reflects its designers’ awareness that computer languages and hardware are changing in significant ways. He sees Julia as a language that can both run smoothly on existing systems and adapt to the changing hardware needs of the components that make up CLIMA, which the group hopes to run on the next generation of supercomputers: the so-called exascale computers (capable of at least one exaflops, or a billion billion [i.e. a quintillion] calculations per second).

“That’s been our goal for a long time, and we know how to make our algorithms work on any kind of computer architecture,” Giraldo stressed. “Those [other] climate models can’t do that; they only run on a certain kind of hardware, and that hardware might be obsolete in five years.”

In October, the NPS team brought sample portions of their code (written in Julia) to the rest of the CliMA group as part of a “bake-off,” so that the group could weigh in on what language the model’s code would be written in. For their demonstration, Giraldo, Kozdon and Wilcox created “a small number of lines of code” with which they were able to solve the Navier-Stokes equations in a 3D box on both central processing units (CPUs) and graphics processing units (GPUs). After showcasing how Julia let them program these notoriously complex equations (equations integral to the operation of all ESMs) while using cutting-edge numerics and eliminating the two-language problem, the NPS team received the go-ahead to proceed with Wilcox and Kozdon’s recommendation and began programming the model’s atmospheric component in Julia.

Julia’s success at the bake-off, however, was not limited to its selection for use in CLIMA. After the NPS team’s demonstration, they were contacted by members of the Julia Lab at MIT who saw an opportunity for collaboration: While the NPS team’s demonstration code was accurate and fast, the lab’s researchers saw the possibility for changes that might make Julia’s code even more intuitive to use for scientists and mathematicians. As a result of the CliMA team’s proposed use of Julia, the Julia Lab was inspired to begin research on how modifications could be made so that math could be directly written, and Julia would perform the work behind the scenes to make the math run efficiently on the computer. This would allow for a performant code that could be written in ways that scientists who are not programmers could both read and edit, ultimately contributing to Wilcox and Kozdon’s goal of producing code that is both a useful tool and accessible to diverse communities of researchers.

For Kozdon and Wilcox, Julia offers additional benefits for the larger NPS community they interact with, especially their students. As an open source language designed for high-performance computing, Julia can be downloaded and used by the public, free of charge. With online communities and tutorials available to all, Julia’s popularity has rapidly increased since the release of version 1.0 in August 2018. The fact that Julia is free to everyone, combined with its ease of use, makes it a valuable option for students who want to learn tools that they can afford to use after completing their time at NPS (after which most lose free or reduced-price access to more expensive proprietary languages). Both Kozdon and Wilcox have worked with students interested in the possibilities of Julia, and Wilcox believes that enthusiasm about this new language is one aspect of CLIMA that has the power to attract exciting new collaborations with students and postdoctoral researchers.

LOOKING FORWARD AT NPS

CLIMA is already creating a lot of buzz for the NPS team. Other campus users of codes similar to those the team is developing for the model, especially those who work in meteorology and oceanography, are watching with interest, eager to use the system in their own research once it is up and running. Meanwhile, Giraldo continues to receive daily emails from researchers around the globe interested in joining the NPS arm of the project. Soon, the team will expand to include postdoctoral researchers dedicated to the design and implementation of CLIMA’s atmospheric component. These researchers will bring their expertise to NPS for the purposes of idea exchange and technological innovation, working alongside Giraldo, Kozdon, and Wilcox as they design the building blocks of what promises to be a dramatically more accurate climate model than ESMs currently in use.

The NPS team knows that providing an ESM with the vastly improved accuracy projected by CliMA has potentially profound implications for both the NPS community and the world at large. More accurate climate modeling is key to preparing for and proactively protecting both naval and other military assets, especially when it comes to the planning and accurate placement of seawalls and other protective measures in the face of rising sea levels, and the team is eager to provide policy makers with the most accurate, up-to-date modeling possible.

Beyond this, the NPS team is contributing to a scientific endeavor that may change the development and workings of ESMs for the foreseeable future—a rare and rewarding opportunity in the practice of science. While their immediate future will be dominated by the daunting challenge of developing a novel atmospheric component for the model in a compressed timeline, Wilcox sees a variety of measures for the team’s success after the close of the project: “[If] this code lives on, beyond us, and beyond our initial funding, and gets used by somebody else … if we write code, it’s robust, it gets transferred to the scientists, they find it useful … they’re able to get important results, they’re able to get results that are interesting to the policy makers … Because, I mean, we can sit around and make codes and new methods, but what’s really cool is when they get used by someone beyond yourself.”